The Extended Reality and Games Lab (XRG Lab), led by College of Information Science professors Lila Boz and Ren Boz, conducts cutting-edge research on extended and virtual reality, including this horizontally flipped interaction in VR for improving spatial ability.

At the Extended Reality and Games Lab (XRG Lab), we conduct research on novel interaction techniques and on enhancing extended (virtual, augmented, and mixed) reality experiences for improved usability and user experience.

Our work mainly consists of the design, development, and evaluation (through empirical user studies) of these interaction techniques and enhanced extended reality experiences.

In general, we engage in work that falls under one of the following larger-scope research questions:

- How can extended reality be enhanced to improve individuals’ lives through better education, health and well-being, training, accessibility, empathy, awareness, and entertainment?

- What are the effects of novel interaction techniques on usability and user experience in extended reality?

- How can the boundary between the real and virtual worlds be blurred such that the technology that connects these two worlds becomes seamless, and more intuitive and immersive user experiences are afforded?

- How can video games be leveraged for beneficial purposes, such as healthier lifestyles, increased knowledge, and improved skills?

Our work is mainly driven by curiosity and a desire to push the boundaries in the development of innovative, interactive, and playful technologies. We strive to contribute to the existing knowledge in this field through peer-reviewed publications and presentations. Graduate and undergraduate students take part in our research.

Projects & Publications

Examples of ongoing research projects at the XRG Lab and related publications are listed below.

Real-Virtual Objects: Exploring Bidirectional Embodied Tangible Interaction in World-Fixed Virtual Reality

In this research, funded by the National Science Foundation (NSF), we explore bidirectional embodied tangible interaction between a human user and a virtual character in world-fixed virtual reality. The interaction is enabled through shared objects that span the boundary between the virtual and physical worlds. We investigate the effects of this interaction on user experience.

L. Bozgeyikli, "Give Me a Hand: Exploring Bidirectional Mutual Embodied Tangible Interaction in Virtual Reality" in 2021 IEEE International Conference on Consumer Electronics (ICCE), pp. 1-6, 2021. https://repository.arizona.edu/handle/10150/661308

Tangiball: Foot-Enabled Embodied Tangible Interaction with a Ball in Virtual Reality

In this research, we explored how foot-enabled embodied interaction in VR affected user experience through a room-scale tangible soccer game we called Tangiball. We compared the developed interaction with a control version in which users played the same game with a completely virtual ball. User study results revealed that tangible interaction yielded statistically significant improvements in user performance and presence, while no difference in motion sickness was detected between the tangible and virtual versions.

L. Bozgeyikli and E. Bozgeyikli, "Tangiball: Foot-Enabled Embodied Tangible Interaction with a Ball in Virtual Reality" in 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, pp. 812-820, 2022. https://repository.arizona.edu/handle/10150/666233

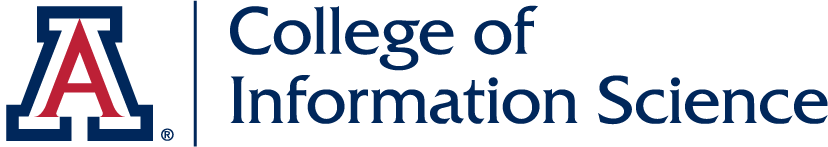

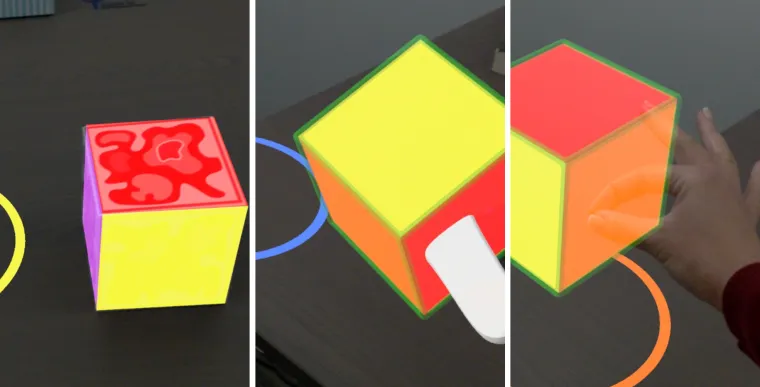

Evaluating Object Manipulation Interaction Techniques in Mixed Reality: Tangible User Interfaces and Gesture

In this research, we investigated the effects of three mixed reality interaction techniques on usability and user experience: gesture-based interaction, controller-based interaction, and a custom tangible object-based interaction in which the tangible object matched the geometry of the superimposed projection and was tracked in real time. The controller and the tangible cube were superior to the gesture method, while being comparable to each other in terms of user experience and performance.

E. Bozgeyikli and L. L. Bozgeyikli, "Evaluating Object Manipulation Interaction Techniques in Mixed Reality: Tangible User Interfaces and Gesture" in 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, pp. 778-787, 2021. https://repository.arizona.edu/handle/10150/661292

Googly Eyes: Exploring Effects of Displaying User’s Eye Movements Outward on a VR Head-Mounted Display on User Experience

In this research, we explore how displaying the HMD-wearing user’s eyes outward affects the user experience of non-HMD users in a collaborative asymmetrical video game. The main motivation is to increase nonverbal communication cues directed at outside users, and hence to improve communication between HMD and non-HMD users.

E. Bozgeyikli and V. Gomes, “Googly Eyes: Displaying User's Eyes on a Head-Mounted Display for Improved Nonverbal Communication” in Extended Abstracts of the 2022 Annual Symposium on Computer-Human Interaction in Play (CHI PLAY '22), 253-260, 2022. https://dl.acm.org/doi/abs/10.1145/3505270.3558348

Mirrored VR: Exploring Horizontally Flipped Interaction in Virtual Reality for Improving Spatial Ability

In this research, we explore the effects of horizontally flipped (i.e., mirror-reversed) interaction on spatial ability and user experience in virtual reality with a cup stacking task. In the mirror-reversed version, the task is performed using direct manipulation with horizontally flipped controls, similar to looking in a mirror while performing object manipulation in real life. Initial results indicate that horizontally flipped interaction shows a trend toward an improvement in spatial orientation, while practicing cup stacking in virtual reality with either control scheme (i.e., regular or mirror-reversed) is beneficial for mental rotation.
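As a rough illustration of what horizontally flipped controls can mean geometrically, the sketch below reflects a tracked controller position across a vertical plane. It is not the implementation used in the study; the names `Vec3` and `mirror_horizontally`, and the assumption that the x-axis points to the user's right with the mirror plane at `x = 0`, are hypothetical choices made only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """A 3D position; x is assumed to point to the user's right."""
    x: float
    y: float
    z: float


def mirror_horizontally(position: Vec3, mirror_plane_x: float = 0.0) -> Vec3:
    """Reflect a position across the vertical plane x = mirror_plane_x.

    Only the left-right coordinate changes; height (y) and depth (z)
    are left untouched, as in a mirror placed along the user's midline.
    """
    return Vec3(2.0 * mirror_plane_x - position.x, position.y, position.z)


# Hypothetical usage: a hand 0.3 m to the right appears 0.3 m to the left.
real_hand = Vec3(0.3, 1.2, 0.5)
virtual_hand = mirror_horizontally(real_hand)
```

Under these assumptions, moving the physical hand to the right moves the virtual hand to the left, and applying the reflection twice returns the original position.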

L. Bozgeyikli, E. Bozgeyikli, C. Schnell and J. Clark, "Exploring Horizontally Flipped Interaction in Virtual Reality for Improving Spatial Ability" in IEEE Transactions on Visualization and Computer Graphics, vol. 29, no. 11, pp. 4514-4524, 2023. https://repository.arizona.edu/handle/10150/670139

VCR: Virtual Cognitive Rehabilitation Using a Virtual Reality Serious Game for Veterans with a History of TBI

In this research, funded by the Department of Veterans Affairs and conducted in collaboration with the College of Medicine Phoenix, we aim to provide cognitive rehabilitation to individuals with a history of traumatic brain injury through a virtual reality serious game. Users explore five novel virtual environments with randomly generated spots and try to complete destination-finding tasks across several levels of gradually increasing difficulty.

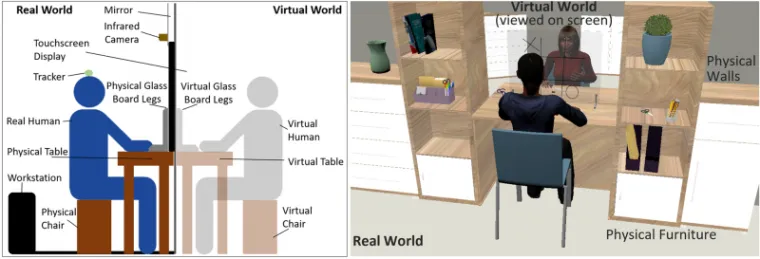

Tic-Tac-Toe on a Two-Sided Glass Board: Non-Verbal Mutual Interaction with a Virtual Human through a Shared Real-World Object

In this research, we explore non-verbal interaction between a real human and a virtual avatar through a novel virtual reality setup, which includes a game of tic-tac-toe played in real time on a shared tangible glass board interface.